US and UK release joint guidelines for secure-by-design AI

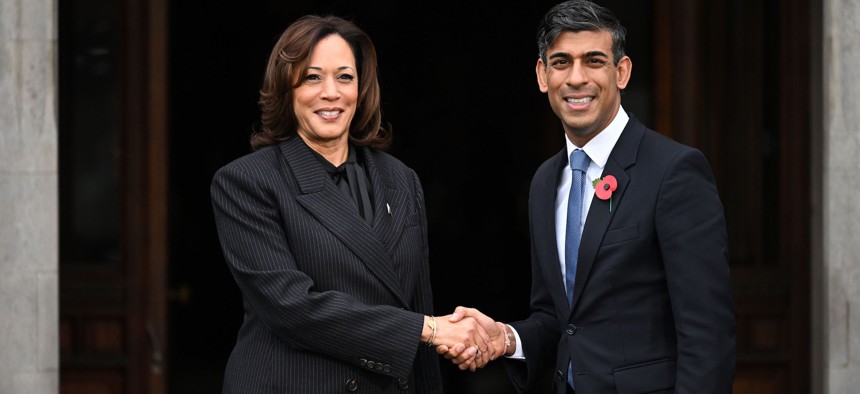

British Prime Minister Rishi Sunak (R) greets U.S. Vice President Kamala Harris on the second day of the recent AI Safety Summit at Bletchley Park in Bletchley, England. The two countries just agreed to secure-by-design guidelines for the development of AI applications. Leon Neal/Getty Images

The Guidelines for Secure AI System Development brings together international perspectives and consensus on what the DHS secretary said could be “the most consequential technology of our time.”

A bilateral cybersecurity effort between the United States and United Kingdom yielded new joint guidance late Sunday on how to develop secure artificial intelligence software, in what officials call a “significant step” toward offering machine learning-specific cybersecurity protocols.

Created in tandem by the U.S. Cybersecurity and Infrastructure Security Agency and the UK’s National Cyber Security Centre, with input from 21 other global ministries and agencies, the Guidelines for Secure AI System Development is the first internationally agreed-upon AI development guidance of its kind.

Experts have consistently said that it is imperative for the U.S. to lead in developing global standards for emerging technologies like AI to maintain a competitive economic and national security position.

“We are at an inflection point in the development of artificial intelligence, which may well be the most consequential technology of our time,” said Secretary of Homeland Security Alejandro Mayorkas in a press release. “Cybersecurity is key to building AI systems that are safe, secure and trustworthy.”

Implementing a secure-by-design approach to training machine learning systems is a key pillar within the guidance, which outlines four steps to mitigate cybersecurity risks and other vulnerabilities in AI systems: secure design, secure development, secure deployment, and secure operation and maintenance.

The secure design component establishes threat modeling as an integral step in mapping out AI systems and how they will function. The secure development and deployment steps recommend creating protocols for reporting these threats, with supply chain security, documentation, and asset and technical debt management as considerations when using AI systems in operations.

The final component, secure operation and maintenance, asks for constant vigilance throughout the AI software life cycle, including efforts like logging and monitoring, update management and information sharing.

Each step listed in the guidance aims to tackle the distinct cybersecurity risks AI and ML technologies face. Maintaining clean and uncorrupted data sets, for example, addresses one key risk, since these data train AI algorithms to function and make automated decisions. Controlling who has access to these and other sensitive data, along with other digital assets used in an ML model, is therefore a major theme within the recommended guidance.
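As a rough illustration of what keeping training data uncorrupted can look like in practice, the Python sketch below records a SHA-256 digest for every file in a data set and later reports any file that was added, removed or modified. This is not taken from the guidelines themselves; the function names and the manifest format are hypothetical, and a real pipeline would likely also sign the manifest and restrict who can write to it.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map each file's path (relative to data_dir) to its digest.

    Hypothetical helper: the manifest is just an in-memory dict here.
    """
    return {
        p.relative_to(data_dir).as_posix(): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def changed_files(data_dir: Path, manifest: dict[str, str]) -> list[str]:
    """List files added, removed or modified since the manifest was built."""
    current = build_manifest(data_dir)
    return sorted(
        name
        for name in set(manifest) | set(current)
        if manifest.get(name) != current.get(name)
    )
```

A team might call `build_manifest` when a data set is approved for training and run `changed_files` before each training job, treating any non-empty result as a reason to stop and investigate.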

“As nations and organizations embrace the transformative power of AI, this international collaboration, led by CISA and NCSC, underscores the global dedication to fostering transparency, accountability and secure practices,” said CISA Director Jen Easterly. “The domestic and international unity in advancing secure by design principles and cultivating a resilient foundation for the safe development of AI systems worldwide could not come at a more important time in our shared technology revolution.”