FCC issues cease-and-desist order to operator linked to AI-generated Biden robocall

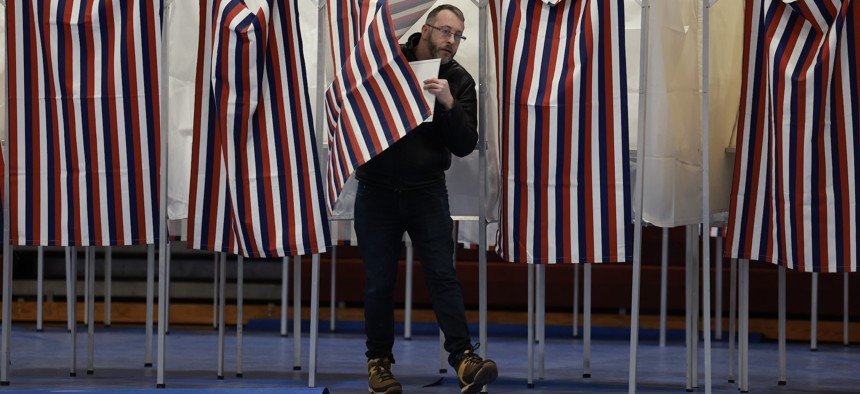

A voter fills out their ballot at a polling location at Bedford High School on January 23, 2024 in Bedford, N.H. The primary electorate was targeted with a spoof robocall that allegedly used AI to impersonate President Joe Biden. Joe Raedle/Getty Images

The agency and 51 state attorneys general are warning that the company may be violating several consumer protection laws.

The Federal Communications Commission on Tuesday issued a cease-and-desist notice to Texas-based Lingo Telecom for its alleged role in originating a batch of AI-generated robocalls sounding like President Joe Biden that were deployed during the New Hampshire Democratic presidential primary last month.

The letter comes as New Hampshire Attorney General John Formella said Tuesday that the calls, purportedly aimed at dissuading residents from casting their votes, had originated in Texas from Life Corporation, owned by Walter Monk. Lingo is also a Texas-based company.

Contact information for Monk and Life Corp was not immediately available. Lingo Telecom did not immediately respond to a request for comment.

Those involved in the fake audio scheme could violate the Telephone Consumer Protection Act, the measure used by the agency to police junk calls.

“AI-generated recordings used to deceive voters have the potential to have devastating effects on the democratic election process. All voters should be on the lookout for suspicious messages and misinformation and report it as soon as they see it,” Formella said in prepared remarks embedded with the FCC’s announcement.

“Consumers deserve to know that the person on the other end of the line is exactly who they claim to be,” said FCC Chair Jessica Rosenworcel, adding, “That’s why we’re working closely with state attorneys general across the country to combat the use of voice cloning technology in robocalls being used to misinform voters and target unwitting victims of fraud.”

A group of 51 attorneys general also sent a warning to the company, urging it to cease the activities or risk violating several state-level consumer protection measures.

The notice comes amid heightened fears that AI systems will supercharge the spread of election misinformation and disinformation. While several voters were able to recognize the falsified audio in New Hampshire, AI technologies can still be used to deploy less overt forms of disinformation that may trick individuals or media outlets into presenting false information as real, policy researchers argue.

Spam and robocalling operations have been traditionally carried out in environments with human managers overseeing calling schemes, but AI technologies have automated some of these tasks, allowing robocalling operations to leverage speech and voice-generating capabilities of consumer-facing AI tools available online.

“Voting this Tuesday only enables the Republicans in their quest to elect Donald Trump again. Your vote makes a difference in November, not this Tuesday,” the deepfaked recording said at the time, two days before the primary was held on Jan. 23.

The FCC’s enforcement arm opened an investigation into the robocall scheme last month, and the agency is also in the midst of a proceeding to determine how best to protect consumers from AI content in robocalls and robotexts. An FCC spokesperson told NBC News that the agency would vote in the coming weeks to criminalize most AI-generated robocalls.