Hickenlooper engages experts to develop AI safety legislation

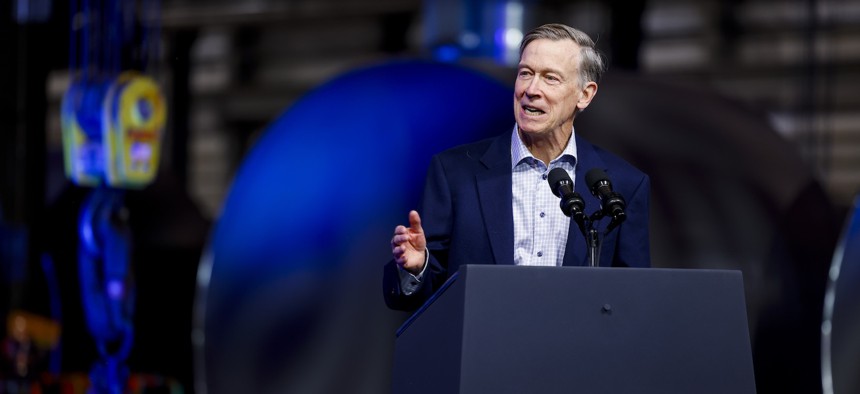

Colorado Democrat Sen. John Hickenlooper, shown here speaking at a Nov. 2023 event in his home state, is gathering support for a policy to regulate generative AI. Michael Ciaglo/Getty Images

Industry experts and other stakeholders are among those who received a questionnaire from the Colorado Democrat that aims to inform the development of AI safety auditing policy.

Sen. John Hickenlooper, D-Colo., is circulating a questionnaire to help fellow lawmakers better understand and craft policy around auditing the safety and efficacy of artificial intelligence systems, part of a larger push to establish common standards for generative AI systems.

Hickenlooper’s vision is to task the Department of Commerce with leading a consensus-driven process that will yield voluntary standards to guide independent audits of AI technologies.

The lawmaker outlined some of his vision for AI oversight in a February speech at Silicon Flatirons' 2024 Flagship Conference.

“We can’t let an industry with so many unknowns and potential harms police itself,” Hickenlooper said. “We need qualified third parties to effectively audit generative AI systems and verify their claims of compliance with federal laws and regulations.”

The questionnaire, which was obtained by Nextgov/FCW, focuses on six subject areas related to AI system auditing, including frequency, scope, transparency, auditing ecosystem, and compliance.

“Standards would outline the recommended performance metrics for an AI audit, detail the level of information needed to conduct an AI audit, and offer recommendations for applying an AI audit based on the level of risk an AI system is categorized in,” the document reads.

AI auditing is the broad process of having an independent third party test an AI system’s inputs and outputs for high-quality, safe results. A spokesperson for Hickenlooper confirmed that the questions are from his office and that they are meant to inform the regulatory framework he announced in early February and potentially other legislative projects.

“These efforts are all related and we are exploring the best path forward currently,” the spokesperson said.

Hickenlooper's question about the scope of audits is particularly detailed. The lawmaker asks how potential audit standards should be tailored for developers working across a software’s architecture, mainly in the context of upstream versus downstream development.

It also asks whether other criteria, such as direct assessments of system outputs, access to AI model training data, verification of internal testing and qualitative evaluations, could be included in AI auditing standards.

“There are no clear standards today on how an independent third party can test an AI system and publicly demonstrate that it was developed safely,” the questionnaire reads. “In the absence of minimum requirements, companies, researchers, and civil society advocates are proactively developing fragmented risk assessment techniques and methods to test their systems to prevent bias and ensure reliability.”

AI safety and reliability are key goals of federal agency policymakers and other lawmakers.

The National Telecommunications and Information Administration issued a request for comment in April 2023 asking for stakeholder feedback on the development of AI auditing standards policy. The National Institute of Standards and Technology also posted a request for information in late 2023, asking for feedback specifically on AI auditing standards intended for guidance.

Industry analysts support the need for standardization in AI system monitoring and evaluation criteria. Divyansh Kaushik, the Associate Director for Emerging Technologies and National Security at the Federation of American Scientists, said that Hickenlooper’s initiative to establish common standards for AI auditing is timely but emphasized the need for a flexible approach to developing regulations.

“In a landscape where AI evolves faster than the seasons change, this proposal, while visionary, must embrace the dynamism of technology,” Kaushik told Nextgov/FCW. “Integrating mechanisms for rapid updates and adaptive standards is crucial, ensuring regulations remain not only relevant but ahead of the curve. Without this agility, we risk anchoring innovation to outdated benchmarks, stifling growth and safety.”