How Army special operators use deepfakes and drones to train for information warfare

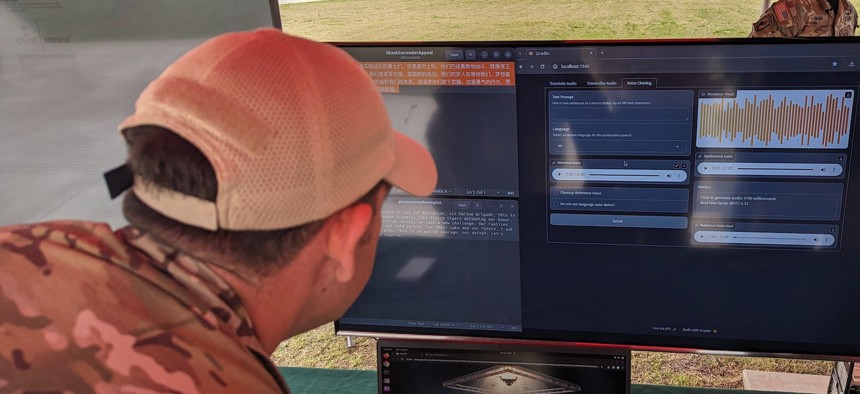

A special operations soldier demonstrates the Ghost Machine voice cloning program at the Army Special Operations Forces capabilities exercises, April 10, 2024. Defense One / Sam Skove

One soldier helped create a voice-cloning program using off-the-shelf AI.

FORT LIBERTY, North Carolina—With a sample of your voice and a gaming laptop, this Army psychological operations instructor can make you appear to say anything: an order for pizza, a call to the doctor, or just hello.

In peacetime, it’s a party trick. In war, it’s a tool that can be used for deception, luring enemies into traps, or encouraging defection by mimicking the voices of enemy soldiers.

Dubbed Ghost Machine, the tool helps Army Special Operations Forces instructors teach operators how cheap, easily available tech is reshaping governments’ abilities to target and influence soldiers.

The basic idea behind Ghost Machine is not new: armies have broadcast the voices of collaborators to encourage enemy surrender since at least World War II. In the 2010s, ARSOF members encouraged defections within Ugandan warlord Joseph Kony’s force by broadcasting messages from members’ families.

But those voice broadcasts required the physical presence and willing participation of the speaker. Now, an ARSOF soldier could theoretically copy an enemy commander’s voice from intercepted communications or public data, then use it to trick enemy troops into thinking their commander had been captured.

The main limitations are hardware and the quantity of data on which to train the program, the instructor said, speaking at a stand displaying psychological warfare technology during ARSOF’s yearly capabilities exercise.

“If you have less performing hardware, you need a little bit more data. If you have high performing hardware, just a minute or 30 seconds [of audio] will do an outstanding job at cloning,” said the instructor, who asked to go by his first name, Achilles, due to Army Special Operations policy.

Ghost Machine is particularly good at replicating the voice of a man who runs a fake podcast for an ARSOF training scenario, thanks to the quantity of the data, Achilles said.

“We have gigabytes of his audio,” Achilles said. “When you use his voice in [Ghost Machine], it is perfect.” The copy is so good, it even accurately creates the breathing patterns and pauses of the podcaster, he added.

In one example loaded into the Ghost Machine, the program duplicated a fictional Chinese enemy commander telling his troops to surrender.

Achilles couldn’t speak to the accuracy of the automatic translation widget used to translate the English-language message into Chinese. However, he said its Spanish translation did a “really good job” based on his knowledge as a native speaker.

Achilles went through a special operations-funded program to train soldiers as software designers, then later helped launch the Ghost Machine project. The tool was designed by one machine-learning engineer contractor and took about six weeks from creation to final product.

The main costs for the program were training Achilles and hiring the contractor, he said. The program’s interface is run off the open-source Gradio app, and its algorithms are powered by commercially available artificial intelligence models.

A separate large language model used for exercises can create whole pieces of content—such as a radio script—based on the Republic of Pineland, the fictional country at the center of ARSOF’s Robin Sage exercise, Achilles said.

Getting audio in the right position for an enemy to hear it is another challenge, but other ARSOF instructors said they’re working on it.

In the past, units would set up speakers pointed in the general direction of the enemy and press play. But that requires carting loudspeakers close to the front, and even then, the enemy may not hear the message if the speakers are not enormous.

Now, instructors teach students how speakers slung from drones can be flown toward enemy positions to blast messages, said Nathan, another soldier training ARSOF forces in psychological operations.

On display was a $40 speaker Nathan built out of car speaker parts—a skill he picked up growing up, he said.

The combination of speakers and drones is in part a reflection of tactics seen in Ukraine, he added, referring to numerous instances of Russian soldiers surrendering to Ukrainian drones. In one video, a Ukrainian drone drops surrender instructions to a Russian soldier, who then follows the drone back to Ukrainian lines.

The example shows how combining technological advancements with time-tested techniques, like dropping leaflets, can work, said ARSOF psychological operations soldiers.

After all, such drops require the U.S. to know the enemy’s “actual location,” adding a level of intimidation, said George, another ARSOF soldier.