Foreign-Influence Operation


It’s disturbing how easily Internet Research Agency accounts blended into America’s online life.

In their efforts to influence the 2016 election, Russian operatives targeted every major social platform, but one demographic group, black Americans, got special treatment, according to two reports made public by the Senate Intelligence Committee yesterday.

The two reports (one published by New Knowledge, a new disinformation-monitoring group, and the other by the Computational Propaganda Project at the University of Oxford) both tally large numbers of posts across social media that generated millions of interactions with unsuspecting Americans. New Knowledge counted 77 million engagements on Facebook, 187 million on Instagram, and 73 million on Twitter. It divided the activity into three buckets: content that targeted the left, the right, and … African Americans.

Partially in response to the reports, the NAACP has called for a one-day boycott of Facebook and Instagram. The NAACP president, Derrick Johnson, criticized Facebook for allowing “the utilization of Facebook for propaganda promoting disingenuous portrayals of the African American community.”

Politicians and journalists usually describe these Russian posts as attempts to “heighten tensions between groups already wary of one another,” to “exploit racial divides,” or to take advantage of “existing political and racial divisions in American society.”

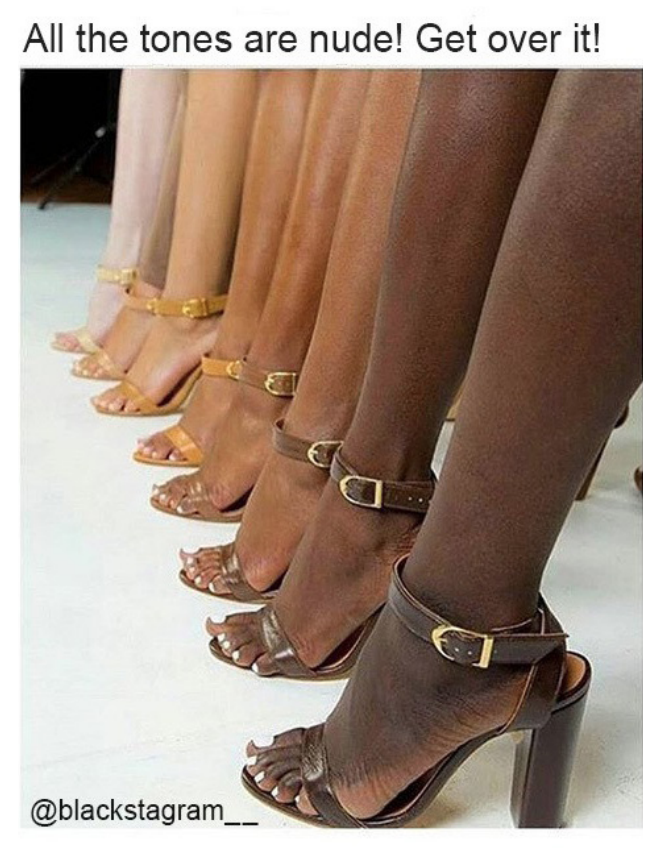

While right-leaning political posts were often explicitly racist, and both types of political posts surely tried to stoke polarization, the posts that targeted black people were different. They promoted a generally Afrocentric worldview, celebrated the freedom of black people, and called for equality. Take the following image post, which New Knowledge said generated the most likes of any Instagram post in its data set. While it was posted by a Russian-linked account, it was originally created by a black-owned leather-goods company, Kahmune.

Is this really “exploiting” racial divides or “heightening tensions”? At most, this post points out something obvious about the nature of American popular culture (calling a certain shade of beige “nude” is dumb) that makes white people mildly uncomfortable.

In another case, an IRA-controlled Facebook page reposted video footage of police brutality, garnering more than half a million shares. If that heightens racial tensions in America, it seems hard to blame the Russians for that.

The IRA operatives were able to deeply interpenetrate real black media. They became part of the meme soup of online black life, sharing posts by real people and being reshared by them in turn. These posts, then, built the very audience that the operatives later targeted with posts arguing “that Mrs. Clinton was hostile to African American interests, or that black voters should boycott the election,” as The New York Times put it.

These posts targeting black people provide the starkest examples of the problem that Facebook faces from foreign actors. Facebook has taken down these posts, but explicitly not because of their content. Instead, the company backed into a way of targeting behavior by foreign actors. According to Facebook, these posts are bad only because they are inauthentic.

Facebook has long promoted the idea that users and posts on the service should be “authentic.” “Representing yourself with your authentic identity online encourages you to behave with the same norms that foster trust and respect in your daily life offline,” the company wrote in a letter to shareholders ahead of its IPO in February 2012. “Authentic identity is core to the Facebook experience, and we believe that it is central to the future of the web.”

For several years, the company emphasized the need to maintain “authentic relationships,” but primarily in the context of people and companies buying “fake likes” for their pages. Authenticity was a business principle, not a political one. “Businesses won’t achieve results and could end up doing less business on Facebook if the people they’re connected to aren’t real,” the company explained in 2014. “It’s in our best interest to make sure that interactions are authentic.”

When the Russian influence operation came to light in the wake of the 2016 election, Facebook began to use the phrase “coordinated inauthentic behavior.” Facebook cleverly adapted a policy designed to fight spam and turned it against the activities of foreign actors. It’s an understandable policy shift, meant to connect the specific fight against electoral interference to Facebook’s core value of “authenticity.”

At the same time, the entire enterprise of “influencer” marketing sure seems like coordinated inauthentic behavior. But financial motivations are automatically deemed authentic and legitimate. Teams of people are recruited from across the world to promote products and ideas that may be dubious.

Also, there are plenty of businesses that use racial-solidarity themes to sell products. Many others drive much more directly at polarizing issues—like gun control—to do the same. One large political-action group spawned a flock of disguised pages to promote candidates and issues, yet they were also seen by Facebook as playing by the rules.

If the Russians had simply been regular businesses with products to sell or a media empire to build, what they did, in the vast majority of cases, would have been fine. Even American political actors working on behalf of global oil companies to, say, thwart climate-change action would be deemed authentic.

All of which should make the next step for Russian operatives obvious: Simply create a consulting business and do exactly what they did before, but this time with a profit motive.

On the other hand, as some skeptics of Russian influence have pointed out, their posts represent a tiny, tiny, tiny fraction of all the polarizing content—let alone total political content—flowing through these massive social platforms. A “legitimate” media business with their results probably would be considered no big deal. Their main influence on American elections may have resulted from the revelation that they’d been involved at all, not from any actual effect that they had on the election.

They paid for ads in rubles. They did not want Americans to know what they’d done. And the reaction to their campaign on social media likely destabilized political discourse far more than any of their actual efforts.

That also helps explain what’s going on with the posts targeting black people. Russian governments have long enjoyed poking the United States about the country’s treatment of African Americans. If celebrating equality for black people or protesting black people’s treatment by police is seen as exploiting American racial divisions, that says a lot about the country, in and of itself.