House Dems seek guardrails for law enforcement’s use of facial recognition

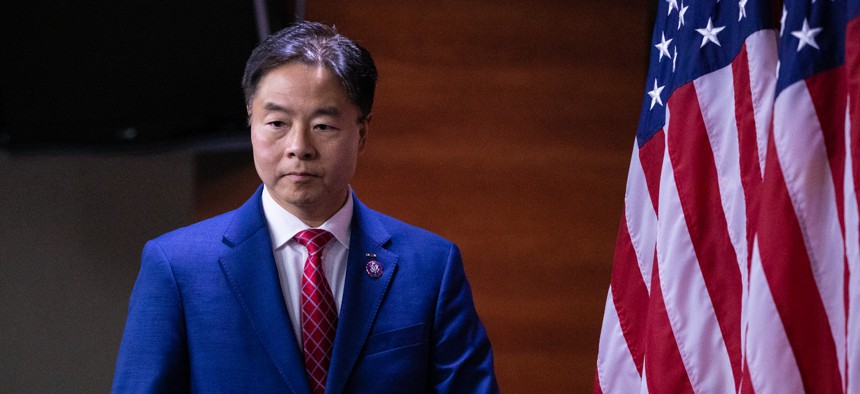

Rep. Ted Lieu, D-Calif., was joined by five other House Democrats in reintroducing the legislation to restrict law enforcement use of facial recognition tech. Anna Rose Layden/Getty Images

The bill would require agencies to obtain a warrant showing probable cause that an individual has committed “a serious violent felony” before facial recognition can be used.

A group of House Democrats reintroduced legislation on Friday to curtail law enforcement’s use of facial recognition software, citing concerns about how unrestricted use of the technology could erode Americans’ constitutional rights.

The Facial Recognition Act would limit and, in some cases, prohibit the use of these surveillance tools by law enforcement agencies at the federal, state and local levels, while also requiring more transparency about how the technology is deployed and used.

The bill would scale back officials’ use of the technology “to situations when a warrant is obtained that shows probable cause that an individual committed a serious violent felony,” according to a summary of the legislation.

The Facial Recognition Act was sponsored by Rep. Ted Lieu, D-Calif., who first introduced the bill in September 2022. Reps. Yvette Clarke, D-N.Y.; Jimmy Gomez, D-Calif.; Glenn Ivey, D-Md.; Sheila Jackson Lee, D-Texas; and Marc Veasey, D-Texas, co-sponsored the latest version of the proposal.

In a statement, Lieu said the legislation “puts forth sensible guardrails that will protect the privacy of Americans against a flawed, unregulated and at times discriminatory technology.”

Law enforcement officials would also be barred from using a positive facial recognition match as “the sole basis upon which probable cause can be established,” and from using facial surveillance software to enforce immigration laws. Nor could agencies use the technology “to create a record documenting how an individual expresses rights guaranteed by the Constitution.”

In addition to restricting the use of facial recognition, the bill seeks to improve accountability and transparency around how agencies use the surveillance software.

Officials would be required to notify individuals who are the subject of a facial recognition search, and the legislation would also establish “a private right of action for individuals harmed” by the technology’s use. Additionally, agencies would be required to regularly audit their systems and conduct annual independent testing of their facial recognition tools.

The bill’s sponsors said safeguards are needed to prevent the technology — which has been criticized for having a discriminatory bias — from being broadly used to police communities and identify protesters at peaceful rallies.

In a statement, Clarke warned that flaws in facial recognition tools “can have real-world consequences,” including when it comes to “its ability to misidentify women and people of color.”

A federal study released by the National Institute of Standards and Technology in December 2019 found that facial recognition technologies misidentified Asian and African American individuals 10 to 100 times more often than white individuals, raising concerns about the software’s effectiveness as a policing tool.